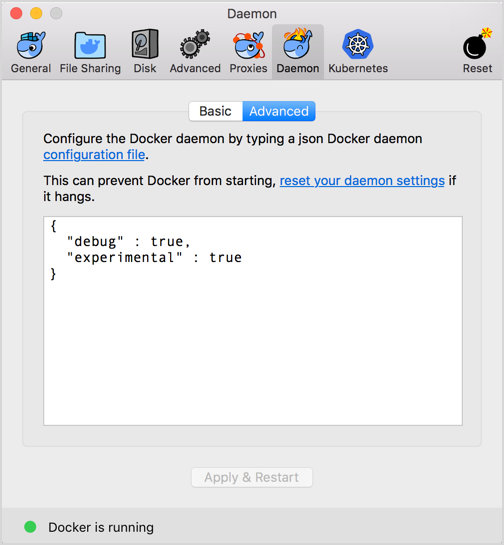

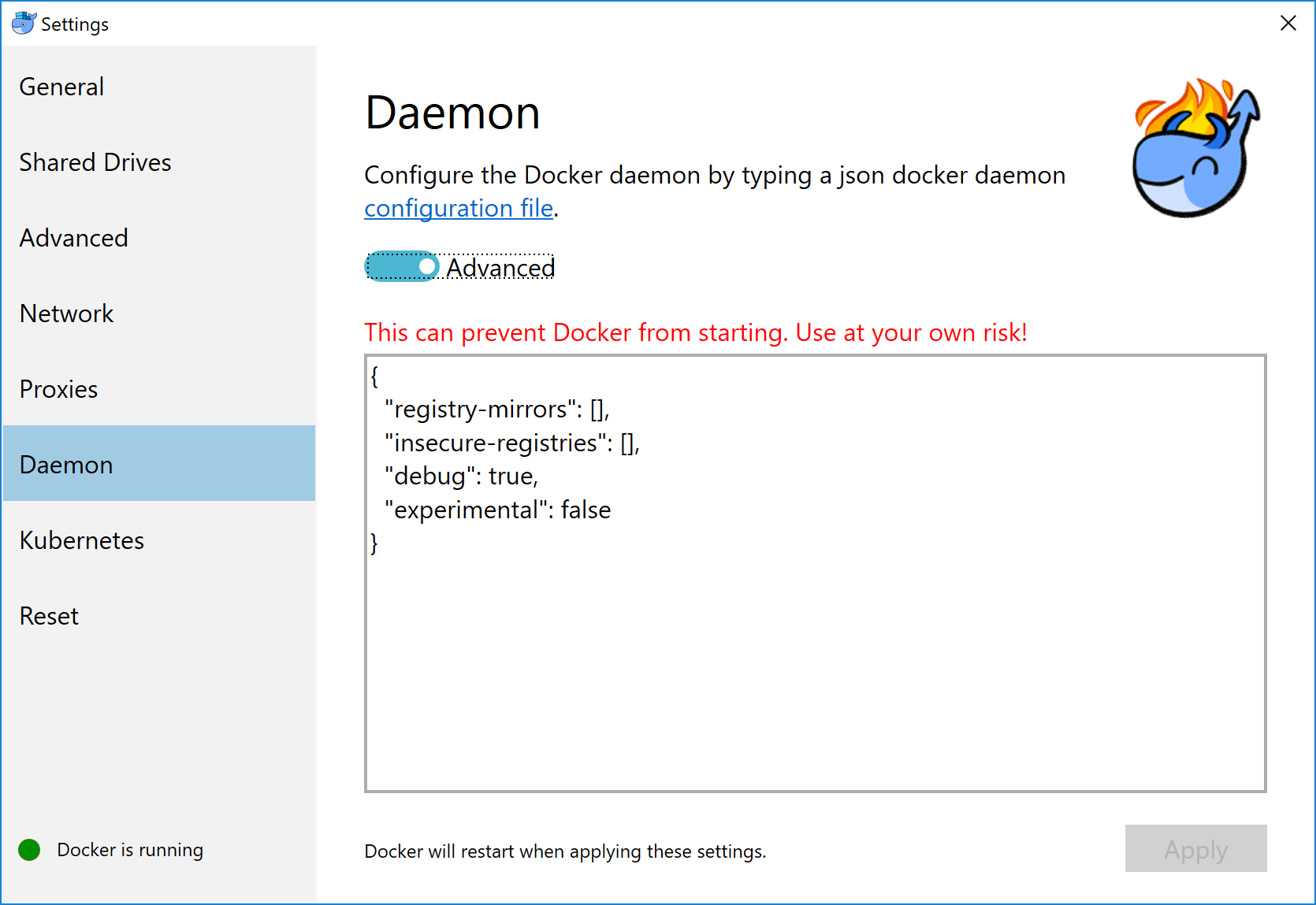

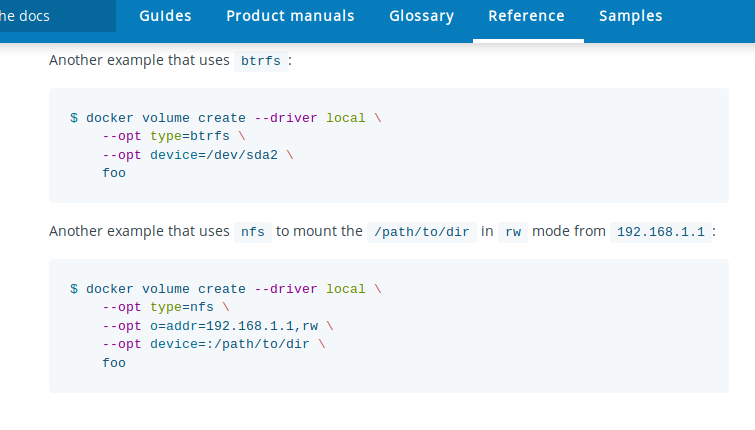

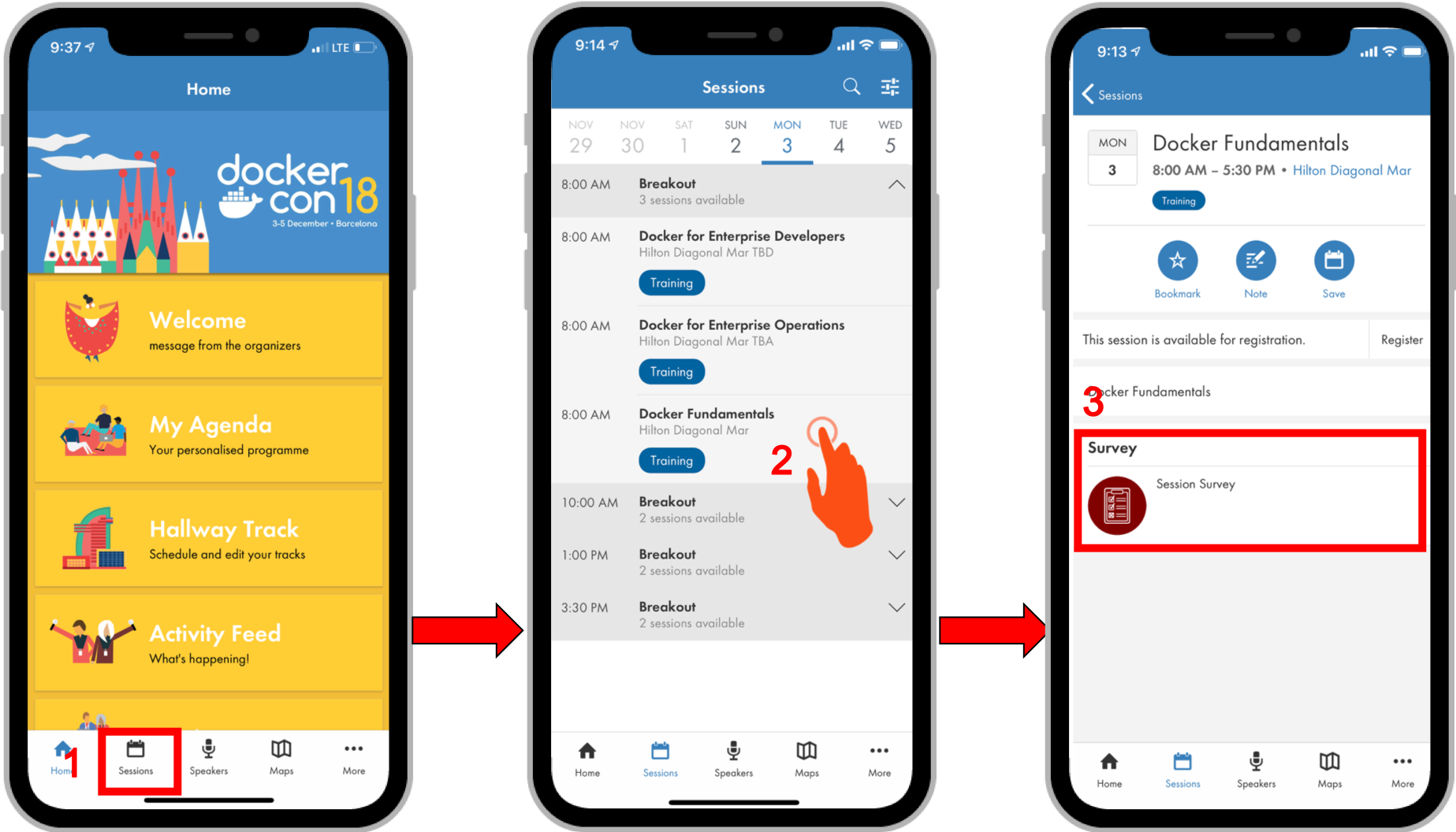

name: inverse
layout: true
class: center, middle, inverse
---
name: impact
layout: true
class: center, middle, impact
---
name: centered
layout: true
class: center, middle
---
layout: false
template: impact
name: agenda

# Agenda

.content[.align-left[
.left-column[
- [Pruning](#prune)
- [Cleaning Logs + YAML](#logs)
- [Network Address Pools](#address-pools)
- [Netshoot](#netshoot)
]
.right-column[
- [Layers](#layers)
- [Buildkit](#buildkit)
- [Local Volume Driver](#volume-local)
- [Fixing Permissions](#fix-perms)
]
]]

---
layout: false
class: title
name: title

.content[
# Tips and Tricks<br>From A Docker Captain

.left-column[
.pic-circle-70[]
]
.right-column[.v-align-mid[.no-bullets[
<br>
- Brandon Mitchell
- Twitter: @sudo_bmitch
- GitHub: sudo-bmitch
]]]
]

???

- My Twitter and GitHub handles are what any self-respecting sysadmin does when you get a permission denied error on your favorite username.
- This presentation is on GitHub and I'll have a link to it at the end. I'll be going fast, so don't panic if you miss a slide.

---

```no-highlight
$ whoami
- Solutions Architect @ BoxBoat
- Docker Captain
- Frequenter of StackOverflow
```

.align-center[
.pic-30[]
.pic-30[]
.pic-30[]
]

???

- BoxBoat is a Docker Business Partner that provides consulting services around containers
- That's my mandatory plug so I can get my expense report reimbursed
- Docker Captains are the technical community members that also love spreading that knowledge.
- I joined the Captains program after answering way too many StackOverflow questions.
- I've answered well over 1k questions and gave a lightning talk at DC US on many of the common questions, which is also up on GitHub.

---
template: inverse

# Who is a Developer?

???

- Show of hands... I'm feeling a little outnumbered.
- You're in the right place... but I snuck in here from the Ops side.
- Job of a good DevOps is to automate themselves out of a job, my goal today is to automate myself out of several hundred jobs simultaneously.
- (Don't tell my boss.)

---
name: prune
template: impact

.content[
# Ops 101 - Full Hard Drive
]

???

- T: 2:00, [Skip to Cleaning Logs](#logs)

---

# Prune

```no-highlight
*$ docker system prune
WARNING! This will remove:
        - all stopped containers
        - all networks not used by at least one container
        - all dangling images
        - all build cache
```

???

- Docker introduced this a while back, and it's the first step
- Some run this and complain that their drives are still full

--

What this doesn't clean by default:

- Running containers (and their logs)
- Tagged images
- Volumes

---

# Prune - YOLO

```no-highlight
$ docker run -d --restart=unless-stopped --name cleanup \
  -v /var/run/docker.sock:/var/run/docker.sock docker /bin/sh -c \
  "while true; do docker system prune -f; sleep 1h; done"
```

???

- We now know how to do the job of an Ops, let's automate that job away
- I call this YOLO for a reason, there's a large risk of deleting something you didn't want to
- Be careful since this removes stopped containers and untagged images
- I've had it delete DTR containers that didn't restart automatically
- Untagged images include your build cache

--

```no-highlight
$ docker service create --mode global --name cleanup \
  --mount type=bind,src=/var/run/docker.sock,\
dst=/var/run/docker.sock \
  docker /bin/sh -c \
  "while true; do docker system prune -f; sleep 1h; done"
```

???

- We can do the same with a swarm service scheduled on every node in the cluster.
- Note the mount flag here got split over two lines, that's one long option

---
name: logs

# Clean Your Logs

- Docker logs to per-container JSON files by default, without any limits
- Rotating the files yourself could break that JSON formatting
- Luckily "without any limits" is just the default... we can change that

???

- T: 5:00, [Skip to Network Address Pools](#address-pools)
- Anyone here ever write a multi-threaded app and forget to lock the shared data before you modify it?
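
A rough way to see which containers are behind a full drive, assuming the default `json-file` log driver on a Linux host, where each container logs to `/var/lib/docker/containers/<id>/<id>-json.log`. The `largest_logs` helper is a made-up name for this sketch, not a Docker command:

```shell
# largest_logs [ROOT] [COUNT]: hypothetical helper, not part of the docker CLI.
# Prints the COUNT biggest json-file logs under ROOT as "<size in KB> <path>".
# ROOT defaults to the usual Linux location; adjust it if your data-root differs.
largest_logs() {
  root=${1:-/var/lib/docker/containers}
  count=${2:-5}
  find "$root" -type f -name '*-json.log' -exec du -k {} + \
    | sort -rn \
    | head -n "$count"
}
```

Run it as root, for example `largest_logs /var/lib/docker/containers 3`, and treat the result as read-only: truncating or rotating those files by hand is exactly what risks the broken JSON mentioned on the slide.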
---

# Clean Your Logs

```no-highlight
$ docker container run \
*   --log-opt max-size=10m --log-opt max-file=3 \
    ...
```

???

- Max size is fairly self-explanatory, 10m = 10 megs
- Max file is the number of log files docker will keep. When the size limit is reached on the current log, docker starts a new empty log, and if there are now more than 3 files, the oldest log is deleted
- This means you can have between 20m-30m of logs with these settings
- You can adjust the config when you run a container... but we don't run containers like this when deploying apps, there's a compose file...

--

```no-highlight
$ cat docker-compose.yml
version: '3.7'
services:
  app:
    image: your_app
*   logging:
*     options:
*       max-size: "10m"
*       max-file: "3"
```

???

- That's a lot of typing to do per service in the compose file. What if we had a dozen services?

---

# Clean Your Logs

```no-highlight
version: '3.7'
*x-default-opts: &default-opts
  logging:
    options:
      max-size: "10m"
      max-file: "3"
services:
  app_a:
*   <<: *default-opts
    image: your_app_a
  app_b:
*   <<: *default-opts
    image: your_app_b
```

???

- Docker added extension fields in 3.4. That's the `x-*` at the top level
- YAML always had an anchor/alias syntax
  - `&default-opts` is an anchor
  - `*default-opts` is an alias
  - `<<` merges in a set of keys from the alias
- Hopefully many of you are thinking about how to use this for more than just logs, repetition inside docker-compose.yml files happens a lot, and we have the tools to make them DRY
- The other reason I hope you're thinking about how to use this in different ways is because we don't need this for logging... we can change docker's default behavior...

---

# Clean Your Logs

```no-highlight
$ cat /etc/docker/daemon.json
{
  "log-opts": {"max-size": "10m", "max-file": "3"}
}

$ systemctl reload docker
```

???

- Set the new default for every container run on this host going forward
- Does not affect already running containers
- Can be overridden per container

---

.center[.pic-80[]]

???
- This isn't just advice for the Linux server admins, you can configure the daemon.json file on macOS

---

.center[.pic-80[]]

???

- And Windows users have the same option, Daemon -> Advanced

---
template: impact

.content[
# Networking
]

???

- Now that I've just worked myself out of a couple hundred jobs, who's hiring?
- This isn't the hallway track
- Though I will have a hallway track session after this

---
name: address-pools

# Subnet Collisions

- Docker networks sometimes conflict with other networks

???

- T: 10:00 [Skip to Netshoot](#netshoot)
- This happens especially when our laptops are moving: coffee shops, connecting to VPNs, or in prod where docker gets connected to the rest of the network after passing all the compliance tests.

--

- Originally we had the BIP setting

```no-highlight
$ cat /etc/docker/daemon.json
{
  "bip": "10.15.0.1/24"
}
```

???

- The "bip" controls the default bridge network named "bridge"
- Containers not attached to a specific network default here
- But most of us create networks for our containers, and those networks get their own IPs, how do we define their subnets?...

---

# Subnet Collisions

- Default address pool added in 18.06

```no-highlight
$ cat /etc/docker/daemon.json
{
  "bip": "10.15.0.1/24",
  "default-address-pools": [
    {"base": "10.20.0.0/16", "size": 24},
    {"base": "10.40.0.0/16", "size": 24}
  ]
}
```

???

- The default address pool controls new networks you create dynamically
- Without this you'd need to manage the subnets yourself
- This is also being added to `docker swarm` commands for overlay networks...

---

# Subnet Collisions

```no-highlight
$ docker swarm init --help
...
  --default-addr-pool ipNetSlice
  --default-addr-pool-mask-length uint32
```

???

- This was just added in 18.09
- Swarm mode has these options when you init the swarm

--

```no-highlight
$ docker swarm init \
  --default-addr-pool 10.20.0.0/16 \
  --default-addr-pool 10.40.0.0/16 \
  --default-addr-pool-mask-length 24
```

???
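
As a quick sanity check on pool sizing: a pool with a /16 base carved into /24 networks holds 2^(24-16) = 256 subnets, so the two pools in these examples leave room for up to 512 networks of that size. A small shell sketch of the arithmetic (`subnets_in_pool` is an illustrative name, not a Docker command):

```shell
# subnets_in_pool BASE_PREFIX SIZE: illustrative helper, not a Docker command.
# A /BASE_PREFIX pool split into /SIZE networks holds 2^(SIZE - BASE_PREFIX) subnets.
subnets_in_pool() {
  echo $(( 1 << ($2 - $1) ))
}

subnets_in_pool 16 24   # one /16 pool cut into /24s, prints 256
```
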
- To set more than one pool, pass the flag multiple times
- I have an open PR to get these modifiable with `docker swarm update`

---
name: netshoot

# Network Debugging

- Networks in docker come in a few flavors: bridge, overlay, host, none
- You can also configure the network namespace to be another container

???

- T: 13:30, [Skip to Layers](#layers)
- This next tip is an old one but well worth repeating
- K8s people know this as pod networking

--

```no-highlight
$ docker run --name web-app -p 9080:80 -d nginx

*$ docker run -it --rm --net container:web-app \
    nicolaka/netshoot ss -lnt
State    Recv-Q  Send-Q  Local Address:Port  Peer Address:Port
LISTEN   0       128                 *:80               *:*
```

???

- Let's start an nginx container and debug it
- Nicolaka, Nicola Kabar, is a docker employee that put together this networking troubleshooter container, it contains loads of common tools
- The `ss` command here is the replacement for `netstat`, we're showing that inside the network namespace for the nginx container, there is something listening on port 80

---

# Network Debugging

```no-highlight
$ docker run -it --rm --net container:web-app \
*   nicolaka/netshoot tcpdump -n port 80
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
```

???

- We can do more than just `ss`, here's an example of tcpdump

--

```no-highlight
$ curl localhost:9080
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
...
```

???

- If we curl the port from another shell on the host, we should see traffic in that container...

---

# Network Debugging

```no-highlight
$ docker run -it --rm --net container:web-app \
    nicolaka/netshoot tcpdump -n port 80
14:08:58.878822 IP 172.17.0.1.55194 > 172.17.0.2.80: Flags [S],...
14:08:58.878848 IP 172.17.0.2.80 > 172.17.0.1.55194: Flags [S.],..
14:08:58.878872 IP 172.17.0.1.55194 > 172.17.0.2.80: Flags [.],...
14:08:58.879089 IP 172.17.0.1.55194 > 172.17.0.2.80: Flags [P.],..
14:08:58.879110 IP 172.17.0.2.80 > 172.17.0.1.55194: Flags [.],...
14:08:58.879208 IP 172.17.0.2.80 > 172.17.0.1.55194: Flags [P.],..
14:08:58.879238 IP 172.17.0.1.55194 > 172.17.0.2.80: Flags [.],...
14:08:58.879267 IP 172.17.0.2.80 > 172.17.0.1.55194: Flags [P.],..
14:08:58.879285 IP 172.17.0.1.55194 > 172.17.0.2.80: Flags [.],...
14:08:58.879695 IP 172.17.0.1.55194 > 172.17.0.2.80: Flags [F.],..
14:08:58.879739 IP 172.17.0.2.80 > 172.17.0.1.55194: Flags [F.],..
14:08:58.879776 IP 172.17.0.1.55194 > 172.17.0.2.80: Flags [.],...
```

???

- And looking back at tcpdump, we see the traffic hitting the container
- We did not need to install any debugging code inside the nginx container
- We can also use this to test connections between containers over docker networking, e.g. ping, curl, etc, as one container talking to another, to know if the issue is our application or our network configuration

---
name: layers
template: impact

.content[
# Layers and Volumes
]

???

- T: 16:30, [Skip to Buildkit](#buildkit)

---

# Understanding Layers

```no-highlight
$ docker image inspect localhost:5000/jenkins-docker:latest \
    --format '{{json .RootFS.Layers}}' | jq .
[
* "sha256:b28ef0b6fef80faa25436bec0a1375214d9a23a91e9b75975bb...",
  ...
  "sha256:08794ff8753b0fbca869a7ece2dff463cdb7cffd5d7ce792ec0...",
  "sha256:37986c5c5dff18257b9a12a19801828a80aea036992b34d35a3...",
* "sha256:34bb0412a3f6c0f3684e05fcd0a301dc999510511c3206d8cd3...",
  "sha256:696245ae585527c34e2cbc0d01d333aa104693e12e0b79cf193...",
  "sha256:91b63ceb91a75edb481c1ef8b005f9a55aa39d57ac6cc6ef490...",
  "sha256:afddea070d31e748730901215d11b546f4f212114e38e685465...",
  "sha256:0c05256b3bb44190557669126bf69897c7faf7628ff1ed2e2d4...",
  "sha256:0c05256b3bb44190557669126bf69897c7faf7628ff1ed2e2d4..."
]
```

???

- You can see the layers when you inspect an image, here I'm looking at just the ".RootFS.Layers" section of the inspect
- There's a lot more output than this, but I've truncated and highlighted two lines, b28, and 34b.
- These are the layers of an image that I've built myself.
- What happens if we look at the layers of my parent image, "jenkins/jenkins:lts"...

---

# Understanding Layers

```no-highlight
$ docker image inspect jenkins/jenkins:lts \
    --format '{{json .RootFS.Layers}}' | jq .
[
* "sha256:b28ef0b6fef80faa25436bec0a1375214d9a23a91e9b75975bb...",
  ...
  "sha256:08794ff8753b0fbca869a7ece2dff463cdb7cffd5d7ce792ec0...",
  "sha256:37986c5c5dff18257b9a12a19801828a80aea036992b34d35a3...",
* "sha256:34bb0412a3f6c0f3684e05fcd0a301dc999510511c3206d8cd3..."
]
```

???

- We see the same b28 and 34b layers in the "jenkins/jenkins:lts" image.
- With layers we don't copy bytes, instead we have multiple pointers to the same slices of filesystem.
- Docker is storing them by their unique hash.
- But what do these layers contain, how can we see the commands being run?...

---

# Understanding Layers

```no-highlight
*$ docker image history localhost:5000/jenkins-docker:latest
IMAGE         CREATED        CREATED BY              SIZE    COMMENT
6ca185e69f2e  292 years ago  LABEL org.label-schema  0B      buildkit
<missing>     292 years ago  HEALTHCHECK &{["CMD-SH  0B      buildkit
<missing>     292 years ago  ENTRYPOINT ["/entrypoi  0B      buildkit
<missing>     3 weeks ago    COPY entrypoint.sh /en  1.08kB  buildkit
<missing>     3 weeks ago    RUN |2 GOSU_VERSION=1.  203MB   buildkit
<missing>     3 weeks ago    RUN /bin/sh -c apt-get  83.6MB  buildkit
<missing>     292 years ago  USER root               0B      buildkit
<missing>     6 weeks ago    /bin/sh -c #(nop) COPY  6.11kB
<missing>     6 weeks ago    /bin/sh -c #(nop) USER  0B
<missing>     6 weeks ago    /bin/sh -c #(nop) EXPO  0B
<missing>     7 weeks ago    /bin/sh -c apt-get upd  2.21MB
<missing>     7 weeks ago    /bin/sh -c #(nop) ADD   101MB
```

???
- You can view layers with the `docker image history` command, which is almost as old as docker itself
- In there you can see each command run, each bit of metadata set
- You get the disk space used per layer to see which commands need to be optimized
- And you can also see that the new layers were created with buildkit while the parent image layers were built without

---

# Understanding Layers

```no-highlight
*$ DOCKER_BUILDKIT=0 docker build --no-cache --rm=false .
Sending build context to Docker daemon 146.4kB
...
Step 5/17 : RUN apt-get update && DEBIAN_FRONTEND=noninteracti...
* ---> Running in 1fc215ebb603
...
 ---> d6dff86e8b89
Step 9/17 : RUN curl -fsSL https://download.docker.com/linux/de...
* ---> Running in a7a3a942a617
...
 ---> a241c22525d8
...
Successfully built b01e4c46a2bf
```

???

- Normally when you build, you let docker remove the intermediate containers
- We can build with the `--rm=false` option and keep the containers around
- The `Running in` lines show each of the container IDs
- Usually you would see a "removing intermediate container" at the end of each step, but that's what we turned off
- So what do a bunch of exited containers get us?...

---

# Understanding Layers

```no-highlight
*$ docker container diff 1fc215ebb603
C /etc
A /etc/python3.5
A /etc/python3.5/sitecustomize.py
...
C /usr/bin
A /usr/bin/pygettext3
A /usr/bin/helpztags
A /usr/bin/python3
A /usr/bin/rvim
A /usr/bin/view
A /usr/bin/python3.5
...
```

???

- We can use `docker diff` to show exactly what has changed inside of a container, and these changes are what docker packages into the image layer.
- Take note of the first character.
  - A: Add (more bytes)
  - C: Change (wasted bytes)
  - D: Delete (saves nothing, only a filesystem marker in the next layer)
- In this case, "C" on a directory is not bad, it just means we added files into the directory and the definition of the folder changed.
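
To get a feel for how much of a layer is new files versus modified ones, the first column of that `docker container diff` output can be tallied. A minimal sketch, assuming the `A`/`C`/`D` prefixes described in the notes; `summarize_diff` is a made-up helper name:

```shell
# summarize_diff: hypothetical helper that reads `docker container diff`
# output on stdin and counts lines by their change type (A, C or D).
summarize_diff() {
  awk '{ n[$1]++ } END { printf "A=%d C=%d D=%d\n", n["A"], n["C"], n["D"] }'
}
```

It would be used as `docker container diff 1fc215ebb603 | summarize_diff`. A high `C` count on regular files (rather than directories) is the hint that a step is rewriting existing bytes.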
---

# Understanding Layers

- If you create a temporary file in a step, delete it in that same step
- Look for unexpected changes that trigger a copy-on-write, e.g. timestamps
- Do your dirty work in early stages of a multi-stage build

???

- `chmod` and `chown` used to trigger CoW even without permission/owner changes
- Docker changed that in 18.06 for overlay2
- For diff, Docker looks at mode, uid, gid, rdev (special file, device with mknod)
- And if not a directory, it also checks: mtime and size
- Multi-stage lets you be very cache wasteful during the compile of your code while still shipping only the resulting binary in the final image
- A messy cache full of compilers and source is useful to speed up the next build

---

# Merge RUN Commands

```no-highlight
RUN apt-get update
RUN apt-get install -y curl
RUN rm -rf /var/lib/apt/lists/*
```

???

- Merging multiple RUN commands is easy...

--

```no-highlight
RUN apt-get update \
 && apt-get install -y curl \
 && rm -rf /var/lib/apt/lists/*
```

???

- Just escape the line feed and join the commands with `&&`

---

# Use Multi-Stage

```no-highlight
*FROM openjdk:jdk as build
RUN apt-get update \
 && apt-get install -y maven
COPY code /code
RUN mvn build

*FROM openjdk:jre as final
*COPY --from=build /code/app.jar /app.jar
ENTRYPOINT ["java", "-jar", "/app.jar"]
```

???

- Multi-stage lets us have a container with the full JDK, add in our tools like Maven, copy our code into the image, and build it, and not have any of that in the final shipped image.
- The final image is just the minimum needed to run the app rather than build it, so just the JRE and the JAR file copied from the previous stage.
- Builds are faster since we only install the development tools once and then get them from the cache in later builds.
- And the resulting image is smaller.
- So multi-stage is awesome! ...
---
template: inverse

.title-content[
# "Hold my beer."<br><br> --BuildKit
]

---
name: buildkit

# BuildKit is Awesome

```no-highlight
*# syntax = tonistiigi/dockerfile:runmount20180607
FROM openjdk:jdk as build
RUN apt-get update \
 && apt-get install -y maven
*RUN --mount=type=bind,target=/code,source=code \
*    --mount=type=cache,target=/root/.m2 \
*    mvn build

FROM openjdk:jre as final
COPY --from=build /output/app.jar /app.jar
ENTRYPOINT ["java", "-jar", "/app.jar"]
```

???

- T: 23:30, [Skip to Local Volume Driver](#volume-local)
- What's going on here? Weird comment, `--mount`, and where's COPY?
- The first comment isn't a comment, it's a parser directive
  - The parser for this dockerfile is an image that we downloaded
  - If we build this on a buildkit host that didn't have the right parser, it would be downloaded without having to upgrade the docker engine
- The `--mount` syntax
  - Comes from the `dockerfile:runmount` image
  - There are two possible types, bind and cache
  - Bind is a mount to the build context, it will be read-only, no data is copied
  - The cache is effectively a named volume managed by buildkit
- Read-only code isn't very useful, probably still do multi-stage
- But the cache will save a lot of time on builds, bind is useful for large data files

---

# BuildKit is Awesome

```no-highlight
# syntax = docker/dockerfile:experimental
FROM python:3
RUN pip install awscli
*RUN --mount=type=secret,id=aws,target=/root/.aws/credentials \
    aws s3 cp s3://... ...
```

```no-highlight
*$ docker build --secret id=aws,src=$HOME/.aws/credentials \
    -t s3-app .
```

???

- BuildKit does secrets, mounting a file from your host into the container
- Now we can connect to secure sources without leaving those creds in the image

---

# BuildKit is Awesome

```no-highlight
$ export DOCKER_BUILDKIT=1
$ docker build -t your_image .
```

???
- To run BuildKit, you just export an environment variable and build like normal

--

```no-highlight
$ cat /etc/docker/daemon.json
{
  "features": {"buildkit": true}
}
```

???

- Or to make BuildKit the new default, you can configure the daemon.json with the above "features" setting
- BuildKit does a lot more than this, check out the video of Tibor and Sebastiaan's talk from yesterday, or there's also a Black Belt talk happening right now

---
template: impact

.content[
# Volumes
]

???

- I had a second talk submitted that didn't make the cut that was all about volumes. So with what time we have left, let's see how we can make developers' lives easier with volumes.

---
name: volume-local

# Local Volume Driver

.center[.pic-80[]]

???

- T: 28:00, [Skip to Fixing Permissions](#fix-perms)
- From Docker's documentation, we have steps to mount things like btrfs and nfs with the local volume driver
- Nice thing is that this works out of the box, no 3rd party driver install required
- Looking at the syntax, it's very similar to the mount command
- The mount command is mostly a frontend to the mount syscall
- The local volume driver is also mostly a pass through to the mount syscall
- With nfs, you typically pass a device "addr:/path" to the command, vs the syscall which passes a device ":/path" with an option "addr"
- To run a mount syscall, we need a type, source device, options, and target
- With NFS, we can create a volume with better options than just this example...

---

# NFS Mounts

```no-highlight
version: '3.7'
volumes:
  nfs-data:
    driver: local
    driver_opts:
      type: nfs
      o: nfsvers=4,addr=nfs.example.com,rw
      device: ":/path/to/dir"
services:
  app:
    volumes:
      - nfs-data:/data
    ...
```

???
- Here is the compose file to create an NFS mount with the local volume driver
- The type is NFS, options are NFS specific plus a RW flag
- Device is the path on the remote server
- This is all you need to run an HA service in swarm with persistent data if you have HA storage available over NFS
- What else can we mount?...

---

# Other Filesystem Mounts

```no-highlight
version: '3.7'
volumes:
  ext-data:
    driver: local
    driver_opts:
      type: ext4
      o: ro
      device: "/dev/sdb1"
services:
  app:
    volumes:
      - ext-data:/data
    ...
```

???

- If you have data on an ext4 or other drive, mount it directly into the container without first mounting it on the host
- Options let you make it read-only

---

# Overlay Filesystem as a Volume

```no-highlight
version: '3.7'
volumes:
  overlay-data:
    driver: local
    driver_opts:
      type: overlay
      device: overlay
      o: lowerdir=${PWD}/data2:${PWD}/data1,\
         upperdir=${PWD}/upper,workdir=${PWD}/workdir
services:
  app:
    volumes:
      - overlay-data:/data
    ...
```

???

- You can make your own overlay filesystem and mount that into a container
- This lets you have unchanging base data, and extend it in one or more different volume mounts
- Note the `o:` option is one long line

---
name: volume-bind

# Named Bind Mount

```no-highlight
version: '3.7'
volumes:
  bind-test:
    driver: local
    driver_opts:
      type: none
      o: bind
      device: /home/user/test
services:
  app:
    volumes:
      - "bind-test:/test"
    ...
```

???

- Named bind mount lets you initialize an empty directory on the host with the contents of the image
- Includes uid/gid and permissions of those files
- Best of named mount + host mount
- Data accessible, not in /var/lib/docker/volumes/...

---
template: inverse

# That's nice, but I just use:<br> $(pwd)/code:/code<br><br>

???

- For developers on their laptops, you're not doing NFS mounts
- Let me stop you there, don't run that...

---
template: inverse

# That's nice, but I just use:<br> ~~$(pwd)/code:/code~~ <br> "$(pwd)/code:/code"

???
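
The difference between the two forms is ordinary shell word splitting, which can be seen without Docker at all. In this sketch `count_args` and `fake_pwd` are throwaway helpers, and the directory name is invented for the demonstration:

```shell
# count_args prints how many separate arguments it received.
count_args() { echo "$#"; }

# fake_pwd stands in for `pwd` in a directory whose name contains a space.
fake_pwd() { printf '%s\n' "/tmp/my project"; }

count_args $(fake_pwd)/code:/code      # unquoted: split at the space, prints 2
count_args "$(fake_pwd)/code:/code"    # quoted: stays one argument, prints 1
```

With the unquoted form, `docker run -v` would receive `/tmp/my` as the volume and `project/code:/code` as the next argument, which is where the confusing errors come from.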
- If you use `$(pwd)` put it in quotes, otherwise a space in the path will give you weird errors
- Developers don't want data coming out of containers, they want to mount their code into the container

---
name: fix-perms

# Fixing UID/GID

```no-highlight
FROM openjdk:jdk as build
*RUN useradd -m app
*USER app
COPY code /home/app/code
RUN --mount=target=/home/app/.m2,type=cache \
    mvn build
CMD ["java", "-jar", "/home/app/app.jar"]
```

???

- T: 32:00, [Skip to End](#thanks)
- Going back to the Java example, let's see how the UID/GID issues impact us
- Let's make our container more secure by configuring it to run as a non-root user

---

# Fixing UID/GID

```no-highlight
version: '3.7'
volumes:
  m2:
services:
  app:
    build:
      context: .
      target: build
    image: registry:5000/app/app:dev
*   command: "/bin/sh -c 'mvn build && java -jar app.jar'"
    volumes:
*   - ./code:/home/app/code
*   - m2:/home/app/.m2
```

???

- And let's make a developer compose file that mounts /code into the container with a command that rebuilds the jar every time we restart.

---

# Fixing UID/GID

```no-highlight
Error accessing /home/app/code: permission denied
```

--

- UID of `app` inside the container doesn't match developer's UID on the host

???

- When we try to run that, we often find that the UID inside the container doesn't match the one on our laptop

---

# Fixing UID/GID

Possible solutions:

- Run everything as root
- Change permissions to 777
- Adjust each developer's uid/gid to match image
- Adjust image uid/gid to match developers
- Change the container uid/gid from `run` or `compose`

???

- Lots of bad solutions that will result in:
  - Writing files as root or another user on your laptop
  - Making a different image per developer
  - Or still missing some edge cases (existing files owned by user in image)

--

- **"... or we could use a shell script"** - Some Ops Guy

---
template: inverse

# Disclaimer

The following slide may not be suitable for all audiences

???
- Those developers that are disturbed by shell scripts may want to turn away for this next slide

---

# Fixing UID/GID

```no-highlight
# update the uid
if [ -n "$opt_u" ]; then
* OLD_UID=$(getent passwd "${opt_u}" | cut -f3 -d:)
* NEW_UID=$(ls -nd "$1" | awk '{print $3}')
  if [ "$OLD_UID" != "$NEW_UID" ]; then
    echo "Changing UID of $opt_u from $OLD_UID to $NEW_UID"
*   usermod -u "$NEW_UID" -o "$opt_u"
    if [ -n "$opt_r" ]; then
*     find / -xdev -user "$OLD_UID" -exec chown -h "$opt_u" {} \;
    fi
  fi
fi
```

???

- This is part of a `fix-perms` shell script I package into my base image
- The first highlighted line gets the UID of the user inside the container
- The second highlight gets the UID of the file or directory mounted as a volume
- If those two UIDs do not match, I change the container to match the host with the `usermod`
- And after running that `usermod`, I run a `chown` on any files still owned by the old UID inside the container

---

# Fixing UID/GID

```no-highlight
FROM openjdk:jdk as build
*COPY --from=sudobmitch/base:scratch / /
*COPY entrypoint.sh /usr/bin/
*ENTRYPOINT ["/usr/bin/entrypointd.sh"]
RUN useradd -m app
USER app
COPY code /home/app/code
RUN --mount=target=/home/app/.m2,type=cache \
    mvn build
CMD ["java", "-jar", "/home/app/app.jar"]
```

???

- I've packaged the above script and some other utilities into a base image that can be used to extend your image with a `COPY --from`
- And then I included an entrypoint.sh script...

---

# Fixing UID/GID

```no-highlight
#!/bin/sh
if [ "$(id -u)" = "0" ]; then
  fix-perms -r -u app -g app /code
  exec gosu app "$@"
else
  exec "$@"
fi
```

???
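
The check at the heart of the `fix-perms` excerpt shown earlier, deciding whether anything needs changing at all, can be tried on its own. A minimal sketch using the same `ls -nd` trick; `owner_uid` and `needs_uid_fix` are illustrative names and are not part of the real script:

```shell
# owner_uid PATH: numeric UID that owns PATH (third column of `ls -nd`).
owner_uid() {
  ls -nd "$1" | awk '{print $3}'
}

# needs_uid_fix USER PATH: succeeds (exit 0) when USER's UID differs from
# the owner of PATH, i.e. when a usermod/chown pass would be required.
needs_uid_fix() {
  [ "$(id -u "$1")" != "$(owner_uid "$2")" ]
}
```

Inside a container this might be called as `needs_uid_fix app /home/app/code && echo "UIDs differ"`, which is the situation where the entrypoint has real work to do.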
- That entrypoint checks if I'm root, and if so, fixes the /code permissions to match the app container uid
- Then I have this `exec gosu` that drops from `root` to the `app` user and runs the cmd
- In prod, where I don't run as root and have set the prod UIDs to match the image, this gets skipped and I exec the command
- The end result is the cmd is running as the user as pid 1, all evidence of the entrypoint is gone from the process list, making it transparent

---

# Fixing UID/GID

```no-highlight
version: '3.7'
volumes:
  m2:
services:
  app:
    build:
      context: .
      target: build
    image: registry:5000/app/app:dev
    command: "/bin/sh -c 'mvn build && java -jar app.jar'"
*   user: "0:0"
    volumes:
    - ./code:/home/app/code
    - m2:/home/app/.m2
```

???

- The developer compose file is the same as before with one addition, the user is set to root.
- The production compose file wouldn't have any of this, use the release image with the JRE instead of JDK, and no other settings.
- Prod will run as default app, with no volume mounts, build, or cmd.
- Note when you restart the container, the app user has already been modified from before, so all the uid/gid changing steps get skipped on the second pass

---

# Fixing UID/GID

- Developers can run the same image and compose file on multiple systems
- App runs with the developer's individual uid/gid
- Changes to `./code` are owned by the developer
- Same image in prod can run without ever needing root

???

- The prod compose file wouldn't change the user, cmd, or volumes

---

# Take A Breakout Survey

Access your session and/or workshop surveys for the conference at any time by tapping the Sessions link on the navigation menu or block on the home screen.

Find the session/workshop you attended and tap on it to view the session details. On this page, you will find a link to the survey.
.center[.pic-50[]]

---
layout: false
class: title
name: thanks

# Thank You

### github.com/sudo-bmitch/presentations<br> github.com/sudo-bmitch/docker-base

.left-column[
.pic-80[]
]
.right-column[.content[.align-right[.no-bullets[
<br><br>
- Brandon Mitchell
- Twitter: @sudo_bmitch
- GitHub: sudo-bmitch
]]]]

???

- That went fast, you can get the presentation from this QR code
- You can look at the base image and a working example in my docker-base repo
- I'll be in a hallway track talking about multi-stage and buildkit at 1PM
- I'll take questions until time runs out
- I'll be happy to discuss further outside the doors after that